QUALITY ASSURANCE

Ensure the highest standard of labeling

Avoid mistakes and save time with a multi-level quality assurance process.

Perfect training data

A large labeling workforce requires professional tools, and we have them.

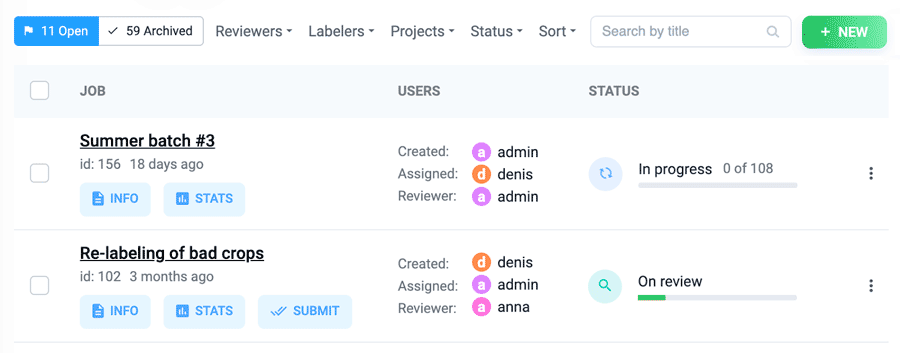

Annotation at scale with labeling jobs

No large labeling project can be done alone. Go big with hundreds of people working simultaneously in Supervisely! Split large annotation work into smaller batches called labeling jobs, based on criteria such as the presence of a specific tag or object class, or simply divide the dataset between several labelers.

Provide a job description in markdown, select whether labelers can see and edit each other’s annotations, and choose which classes and tags are available for the job.

Labelers will get a notification that a new labeling job is available and can check in when ready. Track labeling progress, improve output in a multi-step review process and get valuable insights into your current status.
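As a rough illustration of the splitting described above, here is a minimal Python sketch that filters a dataset by tag and then divides the result between labelers. It is not Supervisely’s API: the function names, the item layout and the labeler names are all assumptions made for this example.

```python
def filter_by_tag(items, tag):
    """Keep only the items that carry the given tag."""
    return [item for item in items if tag in item["tags"]]


def split_between_labelers(items, labelers):
    """Deal items out round-robin so every labeler gets a near-equal batch."""
    jobs = {name: [] for name in labelers}
    for index, item in enumerate(items):
        jobs[labelers[index % len(labelers)]].append(item["name"])
    return jobs


# Hypothetical dataset: image names and tags are made up for illustration.
images = [
    {"name": "img_01.jpg", "tags": {"night"}},
    {"name": "img_02.jpg", "tags": {"day"}},
    {"name": "img_03.jpg", "tags": {"night"}},
    {"name": "img_04.jpg", "tags": {"night", "rain"}},
    {"name": "img_05.jpg", "tags": {"day"}},
]

night_images = filter_by_tag(images, "night")
jobs = split_between_labelers(night_images, ["alice", "bob"])
print(jobs)
```

Each key in `jobs` stands for one labeling job; in practice the platform handles this assignment for you.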

Multi-step reviews

When a labeling job is marked as completed by an annotator, that does not necessarily mean it’s over. Usually, results need to be verified by an expert, a labeling manager or another annotator.

In Supervisely you can manually or automatically assign a reviewer, who is notified each time a labeling job is marked as completed. The reviewer can mark good and bad examples and leave feedback using built-in issue tracking.

When the review is done, a new labeling job containing the bad examples is created automatically, and the process repeats until you end up with perfect training data.
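The review loop above can be pictured with a small Python sketch. This is only a conceptual model under stated assumptions: `run_review_rounds`, the `review_fn` callback and the toy "difficulty" values are hypothetical and do not reflect how Supervisely implements reviews.

```python
def rejected_items(review_results):
    """Return the items a reviewer marked as bad; they seed the next job."""
    return [item for item, accepted in review_results.items() if not accepted]


def run_review_rounds(items, review_fn, max_rounds=10):
    """Repeat label-then-review until nothing is rejected or rounds run out."""
    pending = list(items)
    rounds = 0
    while pending and rounds < max_rounds:
        results = {item: review_fn(item, rounds) for item in pending}
        pending = rejected_items(results)
        rounds += 1
    return rounds, pending


# Toy reviewer (assumption): an item is accepted once it has been
# relabeled as many times as its made-up "difficulty" value.
difficulty = {"img_a": 0, "img_b": 1, "img_c": 2}
rounds_needed, still_bad = run_review_rounds(
    difficulty, lambda item, round_index: difficulty[item] <= round_index
)
print(rounds_needed, still_bad)
```

The `max_rounds` cap is a design choice for the sketch, so the loop always terminates even if some items are never accepted.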

Labeling consensus

Improve your training data by having the same assets labeled more than once by different people, who don’t see each other’s labels and tags.

Decide which annotation output to keep: review results manually side-by-side with the help of an expert, or compare annotations automatically using score metrics like Intersection over Union (IoU).
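For readers unfamiliar with IoU, here is a minimal Python sketch for two axis-aligned bounding boxes. The box format `(x_min, y_min, x_max, y_max)`, the helper names and the 0.8 agreement threshold are assumptions chosen for illustration, not values taken from Supervisely.

```python
def box_iou(a, b):
    """IoU of two axis-aligned boxes given as (x_min, y_min, x_max, y_max)."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    intersection = inter_w * inter_h
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - intersection
    return intersection / union if union > 0 else 0.0


def needs_expert_review(a, b, threshold=0.8):
    """Flag a pair of annotations whose agreement falls below the threshold."""
    return box_iou(a, b) < threshold


# Two labelers drew boxes on the same object (made-up coordinates).
labeler_1 = (0, 0, 10, 10)
labeler_2 = (5, 0, 15, 10)
print(box_iou(labeler_1, labeler_2))
print(needs_expert_review(labeler_1, labeler_2))
```

Here the boxes overlap on half of each box, so the intersection is 50 and the union is 150; the pair would be sent to an expert under the assumed threshold.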

Enforce labeling requirements

Prevent labeling errors before annotation even starts.

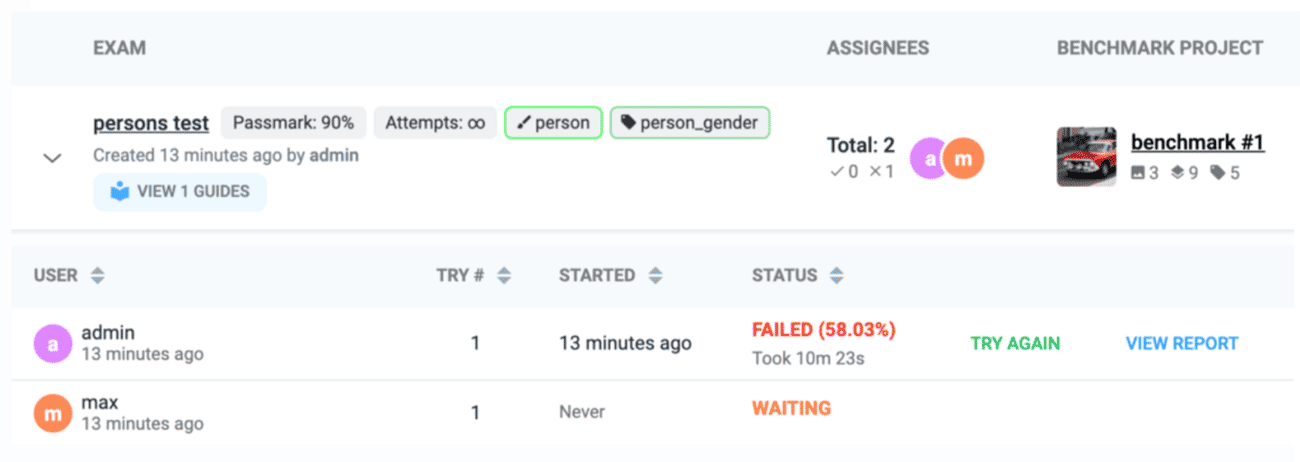

Test how well your labelers understand the task with exams

Avoid unnecessary labeling mistakes by requiring your labelers to pass an annotation exam first. See whether the annotation guides have been learned: select a perfectly labeled ground truth benchmark dataset, which stays hidden from your labeling team, and the people you want to test.

After labeling an empty dataset, each person gets an annotation quality score and a detailed report with a descriptive comparison of expected and actual output.

Additionally, set a quality score threshold and the number of attempts available. Your labelers will be required to retake the exam until the required quality score is met, which helps guarantee great labeling results.
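The threshold-and-attempts rule above boils down to simple logic. The Python sketch below is an assumption-laden illustration: the `take_exam` function, the score scale from 0 to 1 and the sample scores are hypothetical, and the quality score itself could be any metric, for example mean IoU against the ground truth.

```python
def take_exam(attempt_scores, threshold, max_attempts):
    """Return (passed, attempts_used) for a labeler's successive exam scores."""
    allowed = attempt_scores[:max_attempts]
    for attempt, score in enumerate(allowed, start=1):
        if score >= threshold:
            return True, attempt
    return False, len(allowed)


# Made-up scores for one labeler over three attempts.
scores = [0.62, 0.78, 0.91]
print(take_exam(scores, threshold=0.85, max_attempts=3))
print(take_exam(scores, threshold=0.85, max_attempts=2))
```

With three attempts allowed the labeler passes on the third try; with only two, the exam is failed and the labeler is not admitted to the job.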

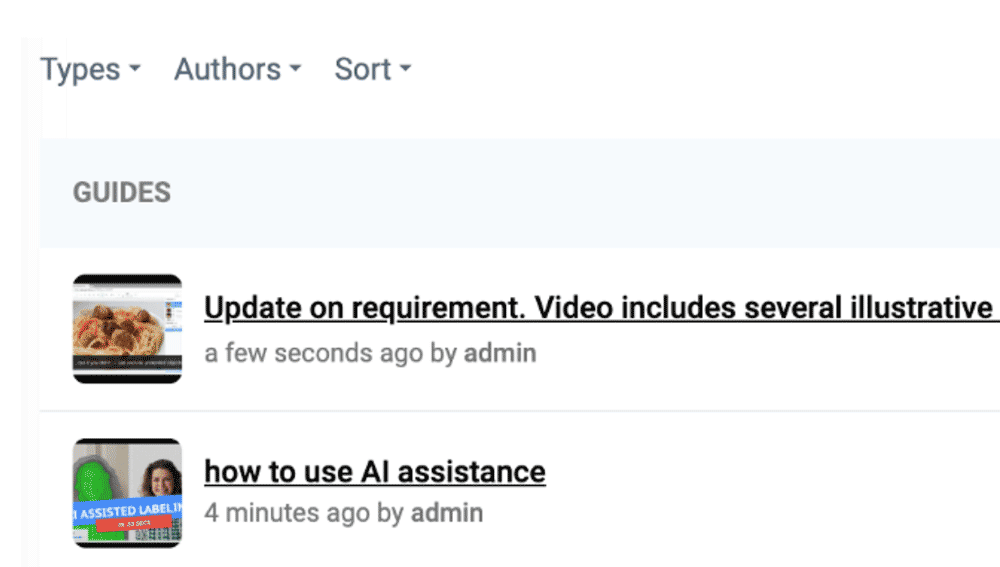

Annotation guides

Not every annotator properly understands the labeling requirements from day one, and many make systematic errors.

Ensure your annotation policy is well presented and acknowledged with annotation guides that include rich text content via markdown, videos and PDF documents, available to a specific team or to everyone.

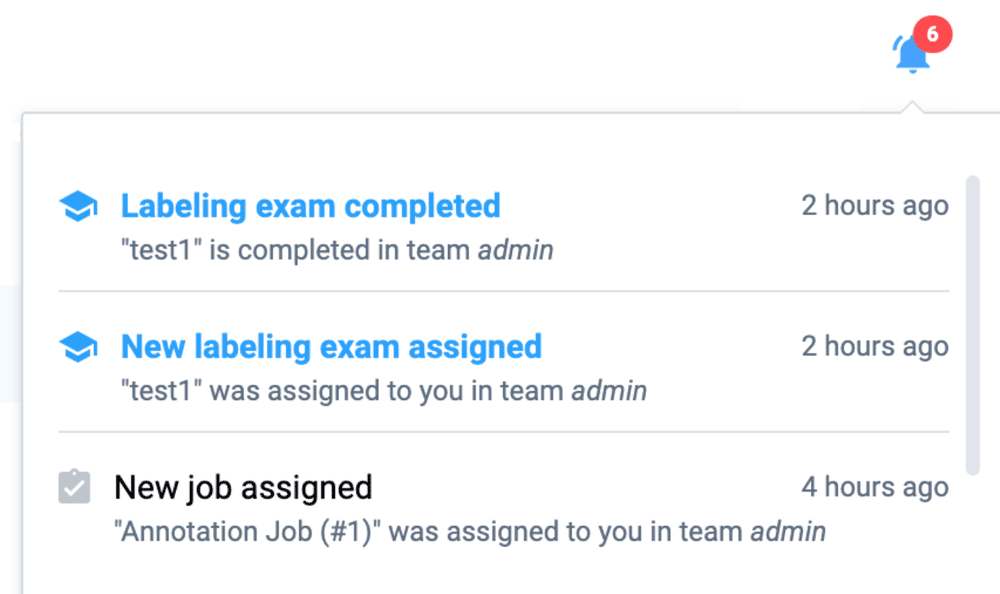

Notifications for your team

Don’t miss relevant information and stay informed of what’s going on.

Get notifications in your Supervisely dashboard, by email or via a webhook event when you are assigned a new labeling job for annotation or review, or when you receive new feedback in issue tracking.

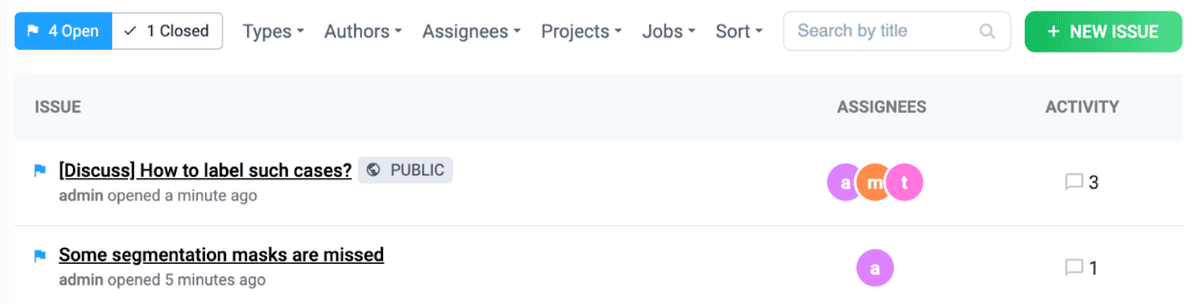

Resolve issues in style

Mistakes happen, but with the right tool you can learn from them and prevent them in the future.

Guarantee quality with issue tracking

Built in cooperation with professional labeling teams and inspired by GitHub issues, Supervisely issue tracking is specially designed for annotation at scale that requires high standards of labeling.

Create and inspect issues on invalid assets and objects, then discuss and resolve them with your team in both the labeling suite and the overview dashboard.

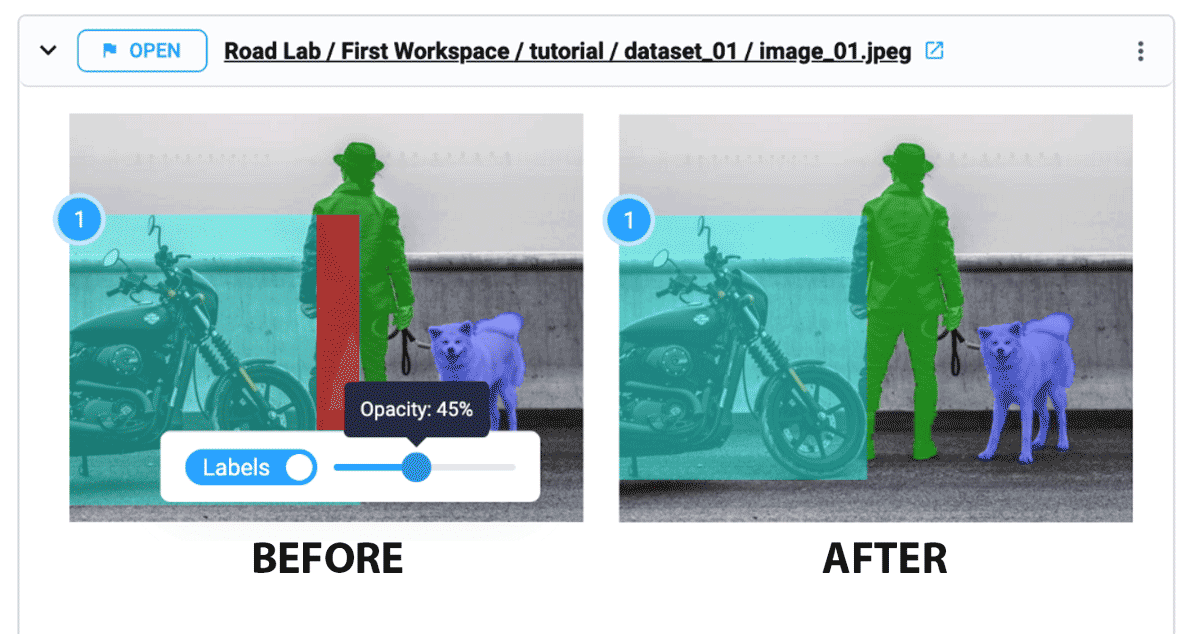

Track how mistakes become fixes

Issue tracking in Supervisely is not just another tracking system, because it’s focused specifically on annotation. Every labeled object can be discussed and resolved separately.

Managers and reviewers can easily track changes by comparing the differences between initial and final labels.

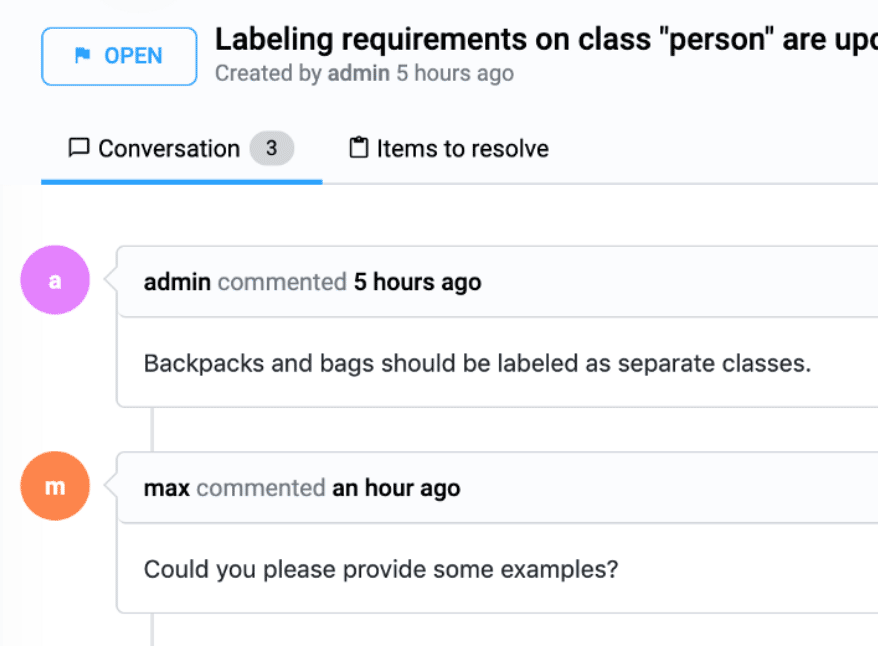

Discuss issues and find solutions

When something needs the collaborative attention of multiple people over time, such as managers, domain experts, data scientists, and in-house and external labelers, you need to keep the conversation under control.

Supervisely provides a convenient way to discuss labeling issues in a single place, linked to the actual labels.

More of Supervisely

And that’s not all!

Data management

Easy import and export, workspaces, backups, data insights and statistics.

User collaboration

Collaborate with your team to transform existing assets into labeled data.

Security & access

Protect your data and users with ACL, permissions and other security features.

SUPERVISELY FOR ENTERPRISES

On-premise edition built for your business

A fully customizable AI infrastructure, deployed in the cloud or on your servers, with everything you love about Supervisely, plus advanced security, control, and support.

Start a 30-day free trial

- Maximum security: hosted behind a firewall on your servers with advanced governance and privacy settings

- Effortless integrations: single sign-on with LDAP or OpenID, cloud storage in AWS or Azure, and a powerful API & SDK

- Priority support: dedicated Slack chat, guided onboarding and personalized training sessions with experts

> downloading pre-requirements...

> pulling docker images...

> installing software...

> Done! Supervisely is running on port :80

supervisely update

> checking for updates...

> Your version is up to date!

Here’s why our customers trust us

8 years

Supervisely has provided a first-rate experience since 2017, longer than most computer vision platforms out there.

100,000+ users

Join a community of thousands of computer vision enthusiasts and companies of every size that use Supervisely every day.

1,000,000,000+ labels

Our online version hosts over 220 million images and over a billion labels created by our great community.

Trusted by Fortune 500 companies. Used by 100,000+ companies and researchers worldwide.

Contact Us

Ready to get started?

Speak with people who are on the same page as you. An actual data scientist will:

- Show a live demo

- Go through the concepts

- Learn about your use case

- Offer a tailored solution

Get your data labeled

Get accurate training data at scale with expert annotators, ML-assisted tools, a dedicated project manager and the leading labeling platform.

Order workforce