How to run OpenAI CLIP with UI for Image Retrieval and Filtering your dataset

How to use text prompts and AI to search and query relevant images in your training datasets.


Many companies have large data collections with thousands or even millions of images, so image retrieval becomes very important when working with huge training datasets in Computer Vision.

In this guide, you will learn what the image retrieval task is (searching, filtering and querying images) and how to use tools from the 🧩 Supervisely Ecosystem, based on OpenAI's CLIP model, to automatically sample images from your computer vision datasets based on a text prompt.

Video tutorial

In this video we explain how to use the OpenAI CLIP model in Supervisely to filter custom datasets in 5 simple steps with a user-friendly GUI:

- Run Supervisely App "Prompt-based Image Filtering with CLIP" from the context menu of your project with images.
- Select a dataset.
- Define a text prompt.
- Sort and browse the most relevant images based on the similarity between the text query and image content.
- Manage a dataset by defining the threshold to filter and move images to another dataset or project.

What is Image Retrieval?

Image retrieval is the computer vision task of browsing, searching, filtering and querying large datasets of images. Nowadays neural networks are used for this task, especially when the images are unlabeled. The most popular example is Google Image Search: a user provides a text query (text prompt), and the image retrieval system searches the entire database and shows the most relevant images. Such a system is sometimes also called a semantic image search engine.

Automatic tools for smart image filtering and querying can be used effectively in Computer Vision research, for example in training data annotation: images can be subsampled based on a text prompt to quickly find unique edge cases and improve training data diversity. The CLIP model from OpenAI is a gold standard for these tasks.

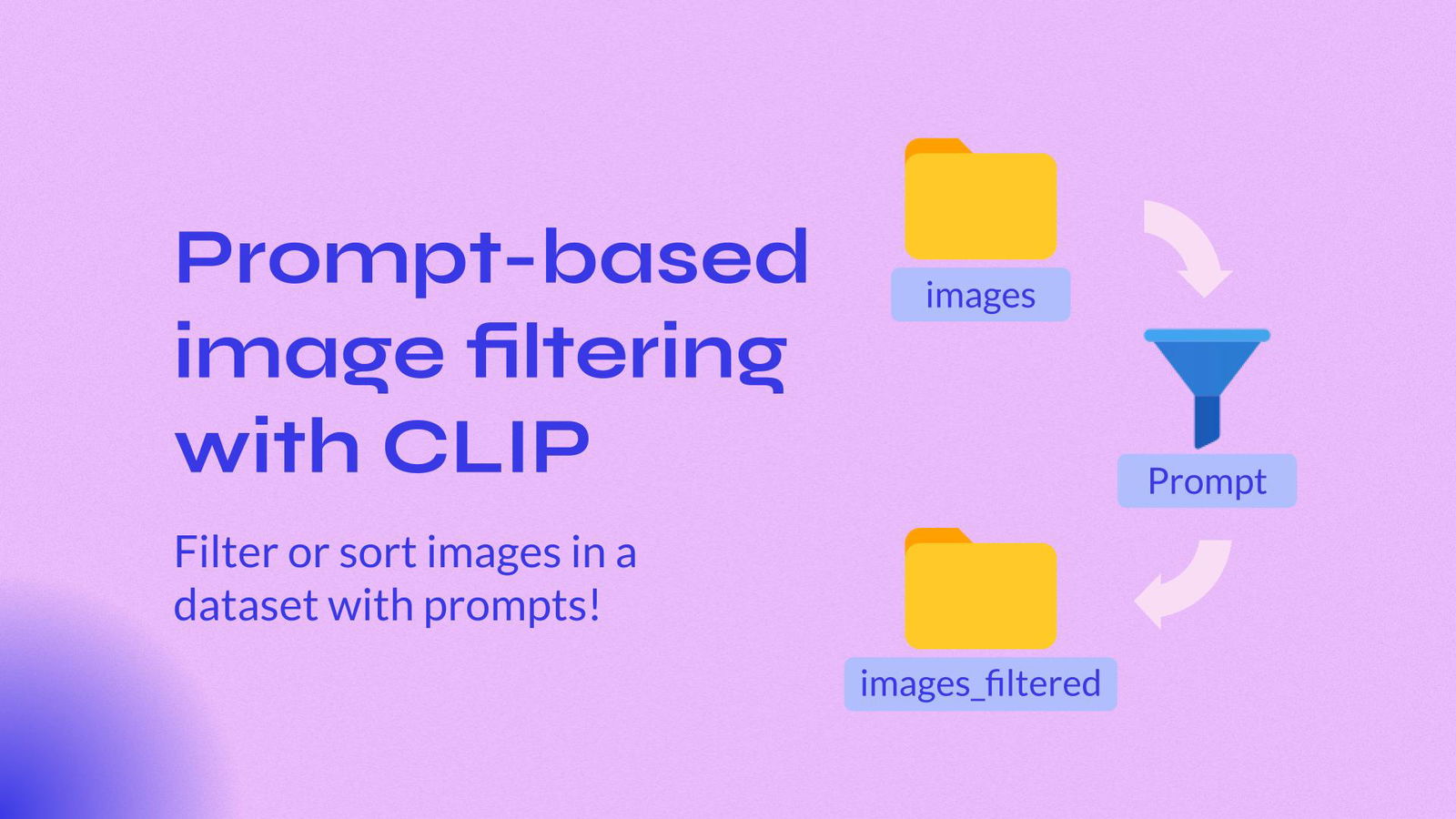

Simple scheme explaining how Image Retrieval systems work


What is the OpenAI CLIP model?

OpenAI's CLIP (Contrastive Language-Image Pre-Training) is one of the most influential foundation models, used as a core component in recent AI breakthroughs including DALL-E and Stable Diffusion. CLIP is an open-source neural network trained on 400 million (image, text) pairs collected from the internet. It offers high performance on classification benchmarks in a "zero-shot" scenario, where the model can be used without a training or fine-tuning step.

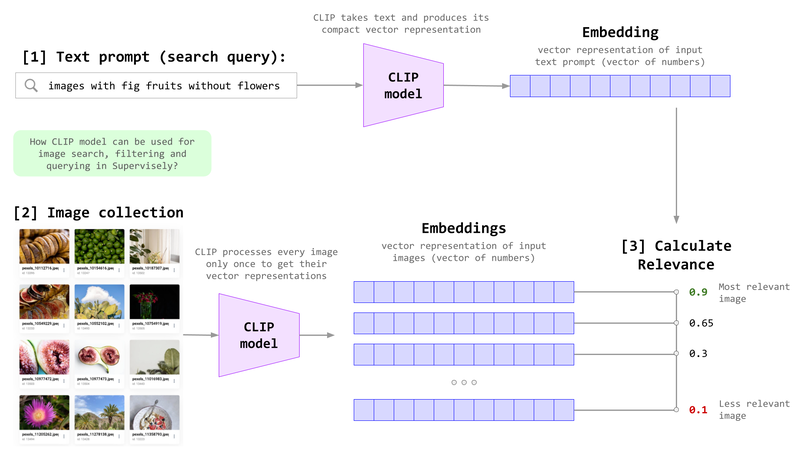

Here is a simple explanation of how the CLIP model is used for searching and filtering relevant images in Computer Vision datasets. The CLIP model takes text as input and produces an embedding (a vector of N numbers). It can also take an image as input and, again, produce an embedding. So the model outputs an embedding for any input text or image.

What does that mean? Embeddings allow us to measure the similarity between texts and/or images. Mathematically, the CLIP model maps (transforms) texts and images to points in an N-dimensional space, so we can calculate the distance between them, that is, how close these points are to each other. The closer the points, the higher the relevance score: how relevant a text-text, image-image or text-image pair is to each other. Sometimes this score is called confidence: how confident the model is that the text and the image (the input pair) are relevant to each other and represent the same concept.
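As a minimal sketch of this comparison, the snippet below computes cosine similarity, a common way to score how close two embeddings are. The 3-dimensional vectors and their names are illustrative assumptions made up for this example, not real CLIP outputs (real embeddings have hundreds of dimensions).

```python
import math


def cosine_similarity(a, b):
    """Return the cosine of the angle between vectors a and b (1.0 = same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Toy stand-ins for embeddings (assumed values, not produced by CLIP).
text_dog = [0.9, 0.1, 0.0]   # pretend embedding of the prompt "a dog"
image_dog = [0.8, 0.2, 0.1]  # pretend embedding of a dog photo
image_car = [0.0, 0.2, 0.9]  # pretend embedding of a car photo

score_dog = cosine_similarity(text_dog, image_dog)
score_car = cosine_similarity(text_dog, image_car)
print(f"text vs. dog image: {score_dog:.3f}")
print(f"text vs. car image: {score_car:.3f}")
```

With these toy values the dog photo scores higher than the car photo, which is the behavior a relevance score is expected to show for a matching text-image pair.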

Image retrieval with CLIP model. This scheme is used in Supervisely App ("Prompt-based Image Filtering with CLIP") for filtering and querying images in custom datasets.
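The retrieval scheme can be sketched in a few lines: encode the prompt once, compare it with every image embedding, and sort by score. This is only an illustration under stated assumptions, not the app's actual implementation; `rank_images`, the file names and the tiny hand-written vectors are hypothetical, and in practice a CLIP text and image encoder would produce the embeddings.

```python
import math


def cosine_similarity(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def rank_images(text_embedding, image_embeddings):
    """Return (image_name, score) pairs sorted from most to least relevant."""
    scored = [
        (name, cosine_similarity(text_embedding, embedding))
        for name, embedding in image_embeddings.items()
    ]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)


# Hypothetical precomputed embeddings (assumed values, not real CLIP outputs).
image_embeddings = {
    "dog.jpg": [0.8, 0.2, 0.1],
    "car.jpg": [0.0, 0.2, 0.9],
    "puppy.jpg": [0.9, 0.1, 0.1],
}
prompt_embedding = [0.9, 0.1, 0.0]  # stand-in for the encoded text prompt

ranking = rank_images(prompt_embedding, image_embeddings)
for name, score in ranking:
    print(f"{name}: {score:.3f}")
```

Because image embeddings do not depend on the prompt, they can be computed once per dataset and reused for every new query; only the text embedding changes.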


Of course, this model is not a silver bullet. CLIP usually produces accurate predictions when recognizing common objects in pictures, but it struggles with more abstract concepts and systematic tasks. For example, CLIP performs poorly at finding the distance between two objects in an image, counting the number of cars and pedestrians in a photo, or doing text recognition (OCR) in general.

The CLIP model and its weights are often used as a core part of other neural network architectures because the weights contain a lot of knowledge about the world and have proven effective in transfer learning.

How to use CLIP for image filtering?

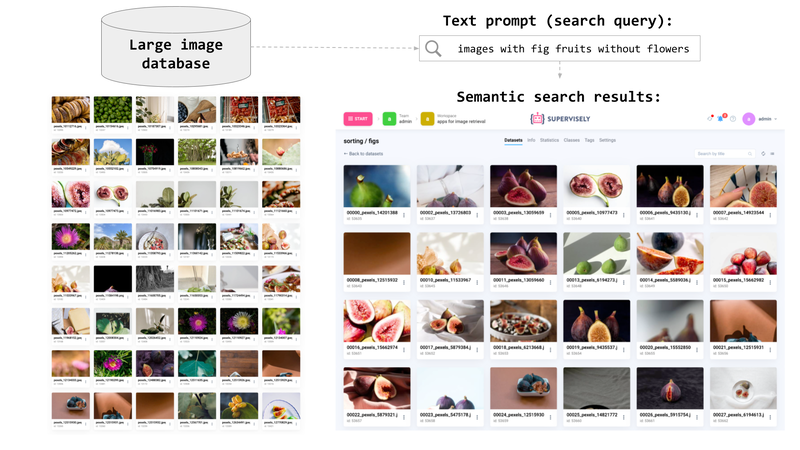

Prompt-based Image Filtering with CLIP

Filter and rank images by text prompts with CLIP models

We designed and implemented the Supervisely App "Prompt-based Image Filtering with CLIP" with a GUI so you can use the CLIP model in a few clicks.

- Initial step: connect your computer with a GPU to your account if you haven't done so yet. Watch the video guide on how to do that (macOS, Ubuntu, any Unix OS or Windows).
- Run the app from the context menu of your project or directly from the Supervisely Ecosystem.
- Define a text prompt and run the calculations.
- Browse the search results and the most relevant images.
- Configure the filtering settings and export the results to another dataset.
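The filtering step, where a threshold on the relevance score decides which images move to another dataset, can be sketched roughly as follows. The function `split_by_threshold`, the file names, the scores and the threshold value are all hypothetical and chosen for illustration; the app's real settings and score ranges may differ.

```python
def split_by_threshold(scores, threshold):
    """Split image names into (matched, rest): matched have score >= threshold."""
    matched = [name for name, score in scores.items() if score >= threshold]
    rest = [name for name, score in scores.items() if score < threshold]
    return matched, rest


# Hypothetical relevance scores, as if each image had been compared with the prompt.
scores = {
    "dog.jpg": 0.31,
    "puppy.jpg": 0.28,
    "car.jpg": 0.12,
    "street.jpg": 0.19,
}

matched, rest = split_by_threshold(scores, threshold=0.25)
print("move to another dataset:", matched)
print("leave in place:", rest)
```

Raising the threshold keeps only the closest matches, while lowering it lets more loosely related images through, so it is worth browsing the sorted results before fixing a value.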

Conclusion

The CLIP model from OpenAI can be used as an efficient instrument for working with your computer vision training datasets. We at Supervisely integrated this great foundation model into the Supervisely Ecosystem and enhanced it with a user-friendly GUI, so you can now leverage it in your Computer Vision research in just a few clicks. Follow this guide and video tutorial and try the Supervisely App based on the CLIP model yourself in our Community Edition for free!

Supervisely for Computer Vision

Supervisely is an online and on-premise platform that helps researchers and companies build computer vision solutions. We cover the entire development pipeline: from data labeling of images, videos and 3D to model training.

The big difference from other products is that Supervisely is built like an OS with countless Supervisely Apps: interactive web tools running in your browser, yet powered by Python. This allows us to integrate all those awesome open-source machine learning tools and neural networks, enhance them with a user interface and let everyone run them with a single click.

You can order a demo or try it yourself for free on our Community Edition — no credit card needed!